How do you test code designed to run on a large and expensive computing cluster? How do you reproduce complex, time-dependent emergent behaviour of a cluster of network components?

A discrete event simulation is like the event loop you find in asynchronous applications, but simplified. Instead of waiting on all sorts of file descriptors, there is only a simulated “sleep” operation, which wakes up at a specified time.

I built an event simulator in PHP and used it to test 5000 lines of unmodified production code.

I used PHP’s fibers to avoid the need to rewrite the code into an asynchronous event-driven pattern. Fibers are like threads in that each has its own stack, but they all run in a single process and thread, and a fiber is suspended only when it asks to be. Fibers allow a kind of cooperative multitasking.

When the code under test runs a database query, a mock database subclass does a simulated sleep and then returns an empty result.

```php
public function query( $sql ) {
    $this->eventLoop->sleep( 0.1 );
    return new FakeResultWrapper( [] );
}
```

The simulated sleep queues an event in the future, then suspends the currently running fiber. The event loop then gets the next event (the one with the lowest timestamp), sets the current timestamp to the one specified in the event, and resumes the event’s fiber.
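The loop described above can be sketched roughly as follows, assuming PHP 8.1 fibers and an `SplPriorityQueue` as the event queue. `SketchEventLoop` and its method bodies are illustrative assumptions, not the actual implementation; only the `sleep()` and `addTask()` names are taken from the snippets in this post.

```php
<?php
// Illustrative sketch only: a tiny fiber-based discrete event loop.
final class SketchEventLoop {
    private SplPriorityQueue $queue;
    private float $now = 0.0;
    private int $serial = PHP_INT_MAX;

    public function __construct() {
        $this->queue = new SplPriorityQueue();
    }

    /** Current simulated time in seconds. */
    public function now(): float {
        return $this->now;
    }

    /** Start a new simulated thread at the current simulated time. */
    public function addTask( callable $task ): void {
        $this->schedule( $this->now, new Fiber( $task ) );
    }

    /** Queue a wake-up event, then hand control back to run(). Must be called inside a fiber. */
    public function sleep( float $seconds ): void {
        $this->schedule( $this->now + $seconds, Fiber::getCurrent() );
        Fiber::suspend();
    }

    private function schedule( float $time, Fiber $fiber ): void {
        // SplPriorityQueue pops the highest priority first, so negate the time
        // to get the earliest event. The decreasing serial number keeps events
        // with equal timestamps in insertion order.
        $this->queue->insert( [ $time, $fiber ], [ -$time, $this->serial-- ] );
    }

    /** Process events in timestamp order until the queue is empty or $until is passed. */
    public function run( float $until ): void {
        while ( !$this->queue->isEmpty() ) {
            [ $time, $fiber ] = $this->queue->extract();
            if ( $time > $until ) {
                break;
            }
            // Jump the clock straight to the event; no real waiting happens.
            $this->now = $time;
            if ( $fiber->isStarted() ) {
                $fiber->resume();
            } else {
                $fiber->start();
            }
        }
    }
}

// Usage: two tasks that sleep for different simulated durations.
$loop = new SketchEventLoop();
$log = [];
$loop->addTask( function () use ( $loop, &$log ) {
    $loop->sleep( 0.3 );
    $log[] = sprintf( 'slow woke at %.1f', $loop->now() );
} );
$loop->addTask( function () use ( $loop, &$log ) {
    $loop->sleep( 0.1 );
    $log[] = sprintf( 'fast woke at %.1f', $loop->now() );
} );
$loop->run( 10.0 );
```

The task that sleeps for less simulated time is resumed first, even though it was added second, because the queue is ordered by timestamp rather than by insertion.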

If the code under test wants to know the current time, we have to arrange for it to get the simulated time from the event loop. But the relevant classes already had a way to do this, to support unit testing.
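As a rough illustration of what such a hook can look like, here is a hypothetical class that accepts an optional clock callback. `ExpiringValue` is invented for this example and is not one of the production classes; the point is only that the simulator can supply its own notion of "now".

```php
<?php
// Hypothetical example class: its time source can be overridden by the caller.
final class ExpiringValue {
    /** @var callable(): float */
    private $clock;
    private float $expiry;

    public function __construct( private string $value, float $ttl, ?callable $clock = null ) {
        // Fall back to the real wall clock when no override is given.
        $this->clock = $clock ?? static fn (): float => microtime( true );
        $this->expiry = ( $this->clock )() + $ttl;
    }

    /** Return the value, or null once the (possibly simulated) clock passes the expiry. */
    public function get(): ?string {
        return ( $this->clock )() < $this->expiry ? $this->value : null;
    }
}

// In a simulation, the callback would return the event loop's current timestamp.
$simulatedNow = 100.0;
$clock = function () use ( &$simulatedNow ): float {
    return $simulatedNow;
};
$entry = new ExpiringValue( 'cached', 5.0, $clock );
$before = $entry->get();   // simulated time 100.0, before expiry at 105.0
$simulatedNow = 106.0;     // advance simulated time without waiting
$after = $entry->get();
```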

To start up a new simulated thread, like a request being handled, we make a new fiber and run a function in the context of that fiber. The time between requests follows an exponential distribution, just like uncorrelated events in reality.

```php
public function makeRequests() {
    while ( true ) {
        $delay = RandomDistribution::exponential( $this->rate );
        $this->eventLoop->sleep( $delay );
        $this->eventLoop->addTask( [ $this, 'handleRequest' ] );
    }
}

public function handleRequest() {
    $client = $this->getRandomClient();
    $db = $client->getLoadBalancer()->getConnection( DB_REPLICA );
    $db->query( 'SELECT do_work()' );
}
```
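One common way to draw exponentially distributed delays is inverse transform sampling. The sketch below is a stand-in for `RandomDistribution::exponential()`, whose real implementation is not shown here; it assumes the argument is a rate in events per second, so the mean delay is `1 / $rate`.

```php
<?php
// Hypothetical stand-in for RandomDistribution::exponential(); the real one may differ.
function sketchExponential( float $rate ): float {
    // Uniform sample in [0, 1), so 1 - $u lies in (0, 1] and log() stays finite.
    $u = mt_rand() / ( mt_getrandmax() + 1 );
    // Inverse of the exponential CDF: F(t) = 1 - exp( -rate * t ).
    return -log( 1 - $u ) / $rate;
}

// Sanity check: the average delay should be close to 1 / rate.
mt_srand( 42 );
$rate = 7500.0;
$n = 100000;
$sum = 0.0;
for ( $i = 0; $i < $n; $i++ ) {
    $sum += sketchExponential( $rate );
}
$mean = $sum / $n;
```

With a rate of 7500 per second, the mean gap between simulated requests comes out at roughly 0.13 milliseconds.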

This was easy and worked surprisingly well. The main challenge was dealing with the large amount of data generated by the simulation. I added a set of metrics classes and a system for writing their state to a CSV file. I used a spreadsheet to process and plot the data.
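A metrics-to-CSV setup along these lines could look like the sketch below. `CounterMetric` and `CsvReporter` are hypothetical names and the column layout is an assumption; only the `recordTimeStep()` name is borrowed from the snippets in this post.

```php
<?php
// Hypothetical metric class: counts events between reports.
final class CounterMetric {
    private int $count = 0;

    public function __construct( public readonly string $name ) {
    }

    public function increment(): void {
        $this->count++;
    }

    /** Return the count since the last report and reset it. */
    public function flush(): int {
        $n = $this->count;
        $this->count = 0;
        return $n;
    }
}

// Hypothetical reporter: one CSV row per time step, one column per metric.
final class CsvReporter {
    /** @var resource */
    private $handle;

    /** @param CounterMetric[] $metrics */
    public function __construct( string $path, private array $metrics ) {
        $this->handle = fopen( $path, 'w' );
        $names = array_map( fn ( CounterMetric $m ) => $m->name, $metrics );
        $this->writeRow( array_merge( [ 'time' ], $names ) );
    }

    public function recordTimeStep( float $now ): void {
        $values = array_map( fn ( CounterMetric $m ) => $m->flush(), $this->metrics );
        $this->writeRow( array_merge( [ $now ], $values ) );
    }

    private function writeRow( array $row ): void {
        // Explicit empty escape character avoids the PHP 8.4 deprecation notice.
        fputcsv( $this->handle, $row, ',', '"', '' );
        fflush( $this->handle );
    }
}

// Usage: two counters reported at simulated times 1.0 and 2.0.
$requests = new CounterMetric( 'requests' );
$errors = new CounterMetric( 'errors' );
$path = tempnam( sys_get_temp_dir(), 'sim' );
$reporter = new CsvReporter( $path, [ $requests, $errors ] );
$requests->increment();
$requests->increment();
$errors->increment();
$reporter->recordTimeStep( 1.0 );
$requests->increment();
$reporter->recordTimeStep( 2.0 );
$lines = file( $path, FILE_IGNORE_NEW_LINES );
```

The resulting file opens directly in a spreadsheet, with one row per time step.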

Administrative tasks, like reporting the current state of the metrics, are also done using fibers.

```php
public function report() {
    while ( true ) {
        $this->recordTimeStep();
        $this->eventLoop->sleep( $this->timeStep );
    }
}
```

To terminate the simulation, we throw an exception in every fiber.

```php
private function terminateFibers() {
    foreach ( $this->fibers as $fiber ) {
        if ( $fiber->isSuspended() ) {
            try {
                $fiber->throw( new TerminateException );
            } catch ( TerminateException $e ) {
            }
        }
    }
}
```

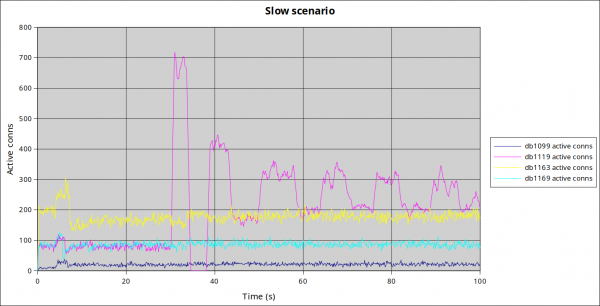

The scenario I tested has 139 application hosts serving about 7500 requests per second. The application tries to balance load across 11 replica database hosts, which have latency and configured load weights derived from production data. I can inject fault conditions and see how the load is rebalanced.

This scenario typically has about 800 fibers active at any given time. This only requires a few hundred megabytes of RAM, so I can comfortably run it on my laptop. It takes about 6 seconds of real time to run 1 second of simulated time.

Hey, that’s cool. I want to use your event simulator for my thing.

If you’re working on MediaWiki, contact me and we’ll talk about your needs.

If you’re working on some other thing and need a generic event simulator for PHP, please study my code and either fork it or use it for inspiration. Ideally turn it into a nice Composer library and let me know when it is ready for everyone to use.