When you shine a laser on a wall, the laser light seems to sparkle. A weird, shifting pattern of random dots appears in the illuminated spot. When you move your head, the pattern shifts around, seeming to follow you. The random dots seem to get larger as you move further away from them. This weird effect is called laser speckle. It is caused by interference patterns created when the coherent light strikes a finely textured surface — particularly textures of approximately the same size as the wavelength of light. It is a little window into the microscopic world.

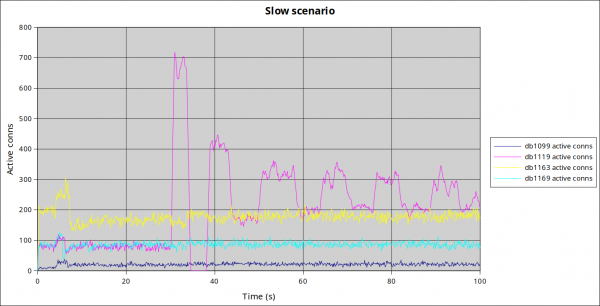

A loop of 30 infrared image frames captured using a Kinect. Look closely at the hand and you can see the laser speckle pattern change.

If you shine a laser on your hand, the places where more blood is flowing beneath your skin will seem to sparkle more vigorously — changing more often, with a finer-grained pattern. This is because the light is bouncing off your blood cells, and when they move, they cause the speckle pattern to shift around.

You can use a camera to record this, and with computer assistance, quantitatively determine blood flow. This is an established technique in medical diagnosis and research, and is called laser speckle contrast imaging, or LSCI.

An awesome paper by Richards et al., written in 2013, demonstrated that LSCI could be done with $90 worth of equipment and an ordinary computer, not thousands of dollars as researchers had previously been led to believe. All you need to do is shine a laser pointer, record with a webcam, and compute the local standard deviation either in time or space. When I first read this about a year ago, I assumed that hobbyists would be falling over each other to try this out, but this appears not to be the case. I haven’t been able to find any account of amateur LSCI.

The University of Texas team, led by Andrew Dunn, previously provided software for the interpretation of captured images, but this software has been withdrawn and was presumably not open source. Thus, I aim to develop open source tools to capture and analyse laser speckle images. You can see my progress in the GitHub project I have created for this work.

The Wikimedia Foundation have agreed to reimburse reasonable expenses incurred in this project out of their Wellness Program, which also covers employee education and fitness.

Inspiration

My sister Melissa is a proper scientist. I helped her with technical aspects of her PhD project, which involved measuring cognitive bias in dogs using an automated training method. I built the electronics and wrote the microcontroller code.

She asked me if I had any ideas for cheap equipment to measure sympathetic nervous system response in dogs. They were already using thermal cameras to measure eye blood flow, but such cameras are very expensive, and she was wondering if a cheaper technique could be used to provide dog owners with insight into the animal’s behaviour. I came across LSCI while researching this topic. I’m not sure if LSCI is feasible for her application, but it has been used to measure the sympathetic vasomotor reflex in humans.

Hardware

Initially, I planned to use visible light, with one or two lenses to expand the beam to a safe diameter. Visible light is not ideal for imaging the human body through the skin, since the absorption coefficient of skin falls rapidly through the red and into the near-infrared region, so infrared light penetrates deeper. But visible light has significant advantages for safety and convenience. The retina has no pain receptors, and the blink reflex is triggered by visible light alone, so an infrared beam gives no warning. Yet like visible light, light from a near-infrared laser is focused by the eye into a tiny spot on the retina. A 60mW IR laser will burn a hole in your retina in a fraction of a second, and the only sign will be if the retina starts bleeding into the vitreous humour.

I ordered a 100mW red laser on eBay, and then started shopping for cameras, thinking (based on Richards et al.) that cameras capable of capturing video in the raw Bayer mode would be easy to come by. In fact, the Logitech utility used by Richards et al. is no longer available, and recent Logitech cameras do not appear to support raw image capture.

I’ll briefly explain why raw Bayer mode capture is useful. Camera manufacturers are lying to you about your camera’s resolution. When you buy a 1680×1050 monitor, you expect it to have some 1.7 million pixels each of red, green and blue — 5.3 million in total. But a 1680×1050 camera only has 1.7 million pixels in total, a quarter of them red, a quarter blue, and half green. Then, the camera chipset interpolates this Bayer image data to produce RGB data at the “full” resolution. This is called demosaicing.
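To make the layout concrete, here is a minimal sketch of pulling one colour site out of a raw Bayer frame without any interpolation. The function name `extractBayerPlane`, the 16-bit pixel storage and the row-major layout are assumptions for illustration, not anything taken from a particular camera or driver.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Copy one colour site out of a raw Bayer mosaic without interpolating.
// Every 2x2 tile holds four sites; (rowOffset, colOffset), each 0 or 1,
// picks one of them. Which offset is red, green or blue depends on the
// sensor, so the caller has to know the layout.
std::vector<std::uint16_t> extractBayerPlane(
    const std::vector<std::uint16_t>& raw,
    std::size_t width, std::size_t height,
    std::size_t rowOffset, std::size_t colOffset)
{
    assert(raw.size() == width * height);
    assert(rowOffset < 2 && colOffset < 2);

    std::vector<std::uint16_t> plane;
    plane.reserve(raw.size() / 4 + 1);
    for (std::size_t y = rowOffset; y < height; y += 2) {
        for (std::size_t x = colOffset; x < width; x += 2) {
            plane.push_back(raw[y * width + x]);
        }
    }
    return plane;
}
```

With an RGGB layout (an assumption, check your sensor), `extractBayerPlane(raw, w, h, 0, 0)` would return the red sites only, at half the width and half the height. That is a quarter of the advertised pixel count, but every value is a real measurement.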

Cameras use all sorts of different algorithms for demosaicing, and while we don’t know exactly what algorithm is used in what camera, they all make assumptions about the source image which do not hold for laser speckle data. Throw away the signal: we’re only interested in the noise. Our image is not smoothly varying; we want to know about sharp variations on the finest possible scale.

Ideally, you would like to use a monochrome camera, but at retail, such cameras are perversely much more expensive than colour cameras. I asked the manufacturer about the technical details of this cheap “B/W” camera. They said it is actually a colour image sensor with the saturation turned down to zero in post-processing firmware!

Enter the Microsoft Kinect. This excellent device is sold with the Microsoft Xbox. I bought one intended for the Xbox 360 (an obsolete model) second hand for $25 AUD plus postage, then replaced the proprietary plug with a standard USB plug and DC power jack.

This device has an IR laser dot pattern projector, an IR camera with a filter matched to the laser, and an RGB camera. Following successful reverse engineering of the USB protocol in 2010-11, it is now possible to extract IR and raw Bayer image streams from the Kinect’s cameras.

The nice thing about the Kinect’s IR laser is that despite providing about 60mW of optical power output, it has integrated beam expansion, which means the product as a whole is eye-safe. To homogenize the dot pattern, you don’t need lenses, you can just use a static diffuser.

When you capture an IR video stream at the maximum resolution, as far as I know, the firmware does not allow you to adjust the gain or exposure settings. The IR laser turns out to be too bright for near-field work. So it’s best to use a static diffuser with an integrated absorber to reduce the brightness. Specifically, masking tape.

The optical rig used to capture the IR video at the top of this post.

Mathematics

My implementation of the mathematics mostly follows a paper by W. James Tom, from the same University of Texas research group. This paper is behind a paywall, but I can summarize it here. Speckle contrast can be computed spatially (spatial variance of a single image), temporally (variance of a location in the video stream through time), or as a combination of these. I started with spatial variance.

You calculate the mean and variance of a rolling window, say 7×7 pixels. This can be done with the usual estimator for sample variance of small samples, with Bessel’s correction:

\(s^2_I = \frac{N \sum\limits_{i=1}^N I_i^2 - \left( \sum\limits_{i=1}^N I_i \right) ^2}{N \left( N - 1 \right)}\)

where \(I_i\) is the image intensity.
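As a sketch of how this estimator looks in code, the function below takes the two running totals and the window size. The name `sampleVariance` and the choice of 64-bit integer totals are assumptions; for a 7×7 window of 16-bit pixels the products stay far below the 64-bit limit.

```cpp
#include <cassert>
#include <cstdint>

// Bessel-corrected sample variance from running totals:
//   s^2 = (N * sumSq - sum^2) / (N * (N - 1))
// The numerator is computed in integers, so it is exact; only the final
// division is done in floating point.
double sampleVariance(std::uint64_t sum, std::uint64_t sumSq, std::uint64_t n)
{
    assert(n > 1);
    // Non-negative for any real data (Cauchy-Schwarz), so no underflow.
    const std::uint64_t numerator = n * sumSq - sum * sum;
    return static_cast<double>(numerator) / static_cast<double>(n * (n - 1));
}
```

For the four values 0, 0, 3, 3 the totals are 6 and 18, and `sampleVariance(6, 18, 4)` gives 3.0, matching the variance computed by hand from the mean of 1.5.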

To find the sum and sum of squares in a given window, you iterate through all pixels in the image once, adding pixels to a stored block sum as they move into the window, and subtracting pixels as they fall out of the window. This is efficient if you store “vertical” sums of each column within the block. I think it says something about the state of scientific computing that to implement this simple moving average filter, convolution by FFT multiplication was tried first, and found to be inefficient, before integer addition and subtraction was attempted.
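The rolling update described above can be sketched as follows. This is an illustration under assumptions, not the project's actual code: `WindowSums` and `slidingWindowSums` are made-up names, the image is assumed to be row-major 16-bit data, and only window positions that fit entirely inside the image are produced.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct WindowSums {
    std::size_t width = 0, height = 0;   // number of window positions per axis
    std::vector<std::uint64_t> sum;      // sum of I over each window
    std::vector<std::uint64_t> sumSq;    // sum of I^2 over each window
};

WindowSums slidingWindowSums(const std::vector<std::uint16_t>& image,
                             std::size_t width, std::size_t height,
                             std::size_t window)
{
    assert(image.size() == width * height);
    assert(window >= 1 && window <= width && window <= height);

    WindowSums out;
    out.width = width - window + 1;
    out.height = height - window + 1;
    out.sum.resize(out.width * out.height);
    out.sumSq.resize(out.width * out.height);

    // "Vertical" sums: colSum[x] covers rows [top, top + window) of column x.
    std::vector<std::uint64_t> colSum(width, 0), colSumSq(width, 0);
    for (std::size_t y = 0; y < window; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            const std::uint64_t v = image[y * width + x];
            colSum[x] += v;
            colSumSq[x] += v * v;
        }
    }

    for (std::size_t top = 0; top < out.height; ++top) {
        if (top > 0) {
            // Move the band down one row: add the entering row,
            // subtract the row that fell out.
            const std::size_t enterRow = (top + window - 1) * width;
            const std::size_t leaveRow = (top - 1) * width;
            for (std::size_t x = 0; x < width; ++x) {
                const std::uint64_t entering = image[enterRow + x];
                const std::uint64_t leaving = image[leaveRow + x];
                colSum[x] += entering;
                colSum[x] -= leaving;
                colSumSq[x] += entering * entering;
                colSumSq[x] -= leaving * leaving;
            }
        }
        // Seed the block with the first `window` columns, then slide right
        // by adding one column sum and subtracting another.
        std::uint64_t s = 0, sq = 0;
        for (std::size_t x = 0; x < window; ++x) {
            s += colSum[x];
            sq += colSumSq[x];
        }
        out.sum[top * out.width] = s;
        out.sumSq[top * out.width] = sq;
        for (std::size_t left = 1; left < out.width; ++left) {
            s += colSum[left + window - 1];
            s -= colSum[left - 1];
            sq += colSumSq[left + window - 1];
            sq -= colSumSq[left - 1];
            out.sum[top * out.width + left] = s;
            out.sumSq[top * out.width + left] = sq;
        }
    }
    return out;
}
```

Each pixel is touched a constant number of times regardless of the window size, and everything stays in integer addition and subtraction.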

The variance is normalized, to produce speckle contrast \(k\):

\(k = \frac{\sqrt{s^2_I}}{\left\langle I \right\rangle}\)

where \(\left\langle I \right\rangle\) is the sample mean. From this, \(x\), the camera exposure time as a multiple of the correlation time, can be found by numerically solving:

\(k^2 = \beta \frac{e^{-2x} - 1 + 2x}{2x^2}\)

For small \(k\), use

\(x \sim \frac{1}{k^2}\)
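This shortcut is the large-\(x\) limit of the model. Once \(e^{-2x}\) is negligible:

\(k^2 \approx \beta \frac{2x - 1}{2x^2} \approx \frac{\beta}{x}\)

so \(x \approx \beta / k^2\), which is \(1/k^2\) up to the constant factor \(\beta\).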

For large \(k\), precompute a table of solutions and then apply a single iteration of the Newton-Raphson method for each new value of \(k\).
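For checking results offline, the equation can also be solved directly. The sketch below deliberately swaps in plain bisection for the table plus Newton-Raphson scheme, because bisection needs no derivative and cannot leave its bracket. The names `speckleModel` and `solveModelForX` and the default \(\beta = 1\) are assumptions.

```cpp
#include <cassert>
#include <cmath>

// Model curve without the beta factor: g(x) = (e^{-2x} - 1 + 2x) / (2x^2).
// expm1 computes e^{-2x} - 1 directly, which limits cancellation at small x.
double speckleModel(double x)
{
    return (std::expm1(-2.0 * x) + 2.0 * x) / (2.0 * x * x);
}

// Solve k^2 = beta * g(x) for x by bisection.
// g falls monotonically from 1 (as x -> 0) towards 0 and stays below 1/x,
// so any positive root lies between 0 and beta / k^2.
double solveModelForX(double k, double beta = 1.0)
{
    assert(k > 0.0 && beta > 0.0);
    const double target = k * k / beta;
    if (target >= 1.0) {
        return 0.0;  // contrast at or above the model's ceiling: no positive root
    }

    double lo = 0.0;
    double hi = 1.0 / target;
    for (int i = 0; i < 100; ++i) {
        const double mid = 0.5 * (lo + hi);
        if (speckleModel(mid) > target) {
            lo = mid;  // curve still above the target, so the root is further right
        } else {
            hi = mid;
        }
    }
    return 0.5 * (lo + hi);
}
```

A hundred halvings per pixel is far too slow for live video, which is where the lookup table earns its keep. The direct solver is still handy for generating that table and for testing it.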

Finally, plot \(1/x\).

Results

It’s early days. We get a big signal from static surfaces which scatter the light heavily. Ideally we would filter that out and provide an image proportional to dynamic scattering. There is a model for this in Parthasarathy et al. Alternatively we can do temporal variance, sometimes called TLSCI, since this should be insensitive to static scattering. After all, you can see the blood flow effect with unaided eyes in the video. The disadvantage is that it will require at least 1-2 seconds to form an image.
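A minimal sketch of the temporal variant, assuming the frames are already in memory as equally sized 16-bit images: each pixel gets the standard deviation of its own history divided by its own mean. The name `temporalContrast` and the vector-of-vectors layout are illustrative choices, not the project's data structures.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Temporal speckle contrast: for each pixel, the standard deviation of its
// intensity across frames divided by its mean across frames.
std::vector<double> temporalContrast(
    const std::vector<std::vector<std::uint16_t>>& frames)
{
    assert(frames.size() > 1);
    const std::size_t pixels = frames.front().size();
    const std::uint64_t n = frames.size();

    // One pass over the stack, accumulating per-pixel totals.
    std::vector<std::uint64_t> sum(pixels, 0), sumSq(pixels, 0);
    for (const auto& frame : frames) {
        assert(frame.size() == pixels);
        for (std::size_t i = 0; i < pixels; ++i) {
            const std::uint64_t v = frame[i];
            sum[i] += v;
            sumSq[i] += v * v;
        }
    }

    std::vector<double> contrast(pixels, 0.0);
    for (std::size_t i = 0; i < pixels; ++i) {
        if (sum[i] == 0) {
            continue;  // always-dark pixel: contrast is undefined, left at 0
        }
        // Same Bessel-corrected estimator as the spatial case, applied in time.
        const double variance =
            static_cast<double>(n * sumSq[i] - sum[i] * sum[i]) /
            static_cast<double>(n * (n - 1));
        const double mean = static_cast<double>(sum[i]) / static_cast<double>(n);
        contrast[i] = std::sqrt(variance) / mean;
    }
    return contrast;
}
```

A pixel that never changes comes out as exactly zero, which is the insensitivity to static scattering mentioned above. The cost is that a whole stack of frames has to be collected before the first image appears.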

One of the first things I did after I connected my Kinect to my computer was wrap a rubber band around one finger and have a look at the video. The reduction in temporal variance due to the reduced blood flow was very obvious. So I’m pretty sure I’m on the right track.

Future work

So far, I have written a tool which captures frames from the Kinect and writes them to a TIFF file, and a tool which processes the TIFF files and produces visualisations like the one above. This is a nice workflow for testing and debugging. But to make a great demo (definitely a high-priority goal), I need a GUI which will show live visualized LSCI video. I’m considering writing one in Qt. Everything is in C++ already, so Qt seems like a nice fit.

The eBay seller sent me the wrong red laser, and I still haven’t received a replacement after 20 days. But eventually, when I get my hands on a suitable red laser, I plan on gathering visible light speckle images using raw Bayer data from the Kinect’s RGB camera.

References